Hawarp is a set of tools for processing web archive records, built on top of the web archive framework JWAT. It uses Apache Hadoop to enable distributed processing on computer clusters.

The tools in Hawarp are:

- Hawarp/droid-identify and hawarp/tika-identify, native MapReduce implementations which directly process web archive records in a distributed manner using a Hadoop cluster.

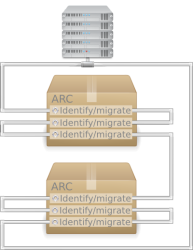

- Arc2warc-migration-cli, a standalone command line tool designed to be used by ToMaR to allow parallelised processing on a computer cluster.

- Tomar-prepare-inputdata prepares web archive container files in ARC or WARC format so that the individual files, wrapped in web archive records, can be processed by ToMaR.

- Unpack2temp-identify unpacks ARC or WARC container files to the temporary local file system of cluster nodes in order to run various file format identification tools afterwards.

- Cdx-creator creates CDX index files, which are required if the Wayback software is used to display archived resources contained in ARC or WARC container files.

What is Hawarp?

Hawarp is a set of tools that helps you to

- process web archive data by means of the Hadoop framework

- prepare your ARC or WARC container files and process these in ToMaR.

What are the benefits?

Hawarp allows you to:

- Extract file format identification information for individual files contained in ARC or WARC files

- Migrate very large data sets from ARC to WARC

- Extract files from ARC or WARC records to apply property extraction

- Run a set of file format identification/characterisation tools on ARC or WARC files.
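
To make the idea of "files wrapped in web archive records" more concrete, here is a minimal Python sketch that walks the records of an uncompressed WARC byte stream and pulls out each payload. It is not Hawarp's code: Hawarp itself relies on JWAT and Hadoop, and real container files are usually gzip-compressed. The names `iter_warc_records` and `make_record` are hypothetical helpers introduced only for this illustration, and the sample records are deliberately simplified.

```python
import io


def iter_warc_records(stream):
    """Yield (headers, payload) for each record in an uncompressed WARC stream.

    Hypothetical helper for illustration; not part of Hawarp or JWAT.
    """
    while True:
        line = stream.readline()
        if not line:
            return  # end of stream
        if not line.strip():
            continue  # skip the blank lines that separate records
        if not line.startswith(b"WARC/"):
            raise ValueError(f"expected a WARC version line, got {line!r}")

        # Read named header fields up to the empty line that ends the header.
        headers = {}
        while True:
            line = stream.readline()
            if line in (b"\r\n", b"\n", b""):
                break
            name, _, value = line.decode("utf-8").partition(":")
            headers[name.strip()] = value.strip()

        # Content-Length gives the exact size of the record's content block.
        payload = stream.read(int(headers["Content-Length"]))
        yield headers, payload


def make_record(uri: str, body: bytes) -> bytes:
    """Build a simplified record for the demo.

    Mandatory fields such as WARC-Record-ID and WARC-Date are omitted for brevity.
    """
    head = (
        "WARC/1.0\r\n"
        "WARC-Type: resource\r\n"
        f"WARC-Target-URI: {uri}\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n"
    ).encode("utf-8")
    return head + body + b"\r\n\r\n"


sample = make_record("http://example.org/a.txt", b"hello") + make_record(
    "http://example.org/b.bin", b"\x00\x01\x02"
)
records = list(iter_warc_records(io.BytesIO(sample)))
for headers, payload in records:
    print(headers["WARC-Target-URI"], len(payload))
```

Each extracted payload could then be handed to a format identification or property extraction tool, which is roughly the step that the Hawarp tools distribute across a cluster.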

More information

http://hawarp.openplanetsfoundation.org